Building a virtual robot

In this post, I’ll be describing the first stage in getting a working representation of the, as of yet unnamed robot, into the ROS world. I will also be briefly exploring the MoveIt! library, as this might be a useful tool for the future.

The virtual representation of the Bioloid will be built using CAD models of all the individual servos and support brackets. The robot will be loaded up in RViz, where its links an joints will be manipulated. Inverse kinematics will hopefully be calculated by the MoveIt! package later on. But first, a definition is needed of how the joints and links are all connected, and how to move between their frames of reference. The individual CAD components will be oriented correctly onto these frames.

On a side note, I had the chance to photograph the Bioloid in its current state, and also had a Raspberry Pi 2 delivered!

The Bioloid, currently awaiting a heart and brain transplant.

Raspberry Pi 2 delivered! This may become the ROS brain in the near future.

After some background reading, I found out that the best way to create this representation in ROS is by using a standardised XML format file called the Unified Robot Description Format (URDF).

CAD models

In the past I had made a start on drawing a CAD model of the Robotis servo, but since then Robotis has released CAD drawings of all their robot kits online here (old link).

I tidied up the .stp files using FreeCAD, by removing some placeholder parts or sub-parts which would be of no use (e.g. screws, gears and electronics on the inside of the servos and CM-5). I then converted the files into .dae (Collada) format, and imported them into Blender to add some colour textures. For some reason, saving again as .dae in Blender shrunk the model dimensions by 100. I haven’t worried too much about the actual component size at the moment, as only their relative scales have to be correct for now. There is also a bug in RViz which replaces the ambient color of Collada materials by light grey if at least one component of the specified ambient color is 0. To fix this, I manually edited the file and replaced all 0’s with 0.1 in the “ambient” tags. Below are some of the main components, as rendered in Blender.

AX-12

FP04-F1

The Unified Robot Description Format

The URDF file itself is written in a plain markup language. As the definition of various links is written more conveniently with some basic maths, and because many bits of code will inevitably be repeated or recycled (e.g. the mirroring of the left-hand side based on the right-hand side), ROS has a macro language called xacro, which makes it much easier to create and maintain URDF files. A URDF is generated from a xacro file using:

rosrun xacro xacro.py model.xacro > model.urdf

Creating the file for the Bioloid took a fair while, as I created all the translations by eye without knowing the actual distance measurements between the various links, but rather by relying on the CAD components as each one was placed in the chain. I started with the right side of the model, first with the easier arms, then with the legs.

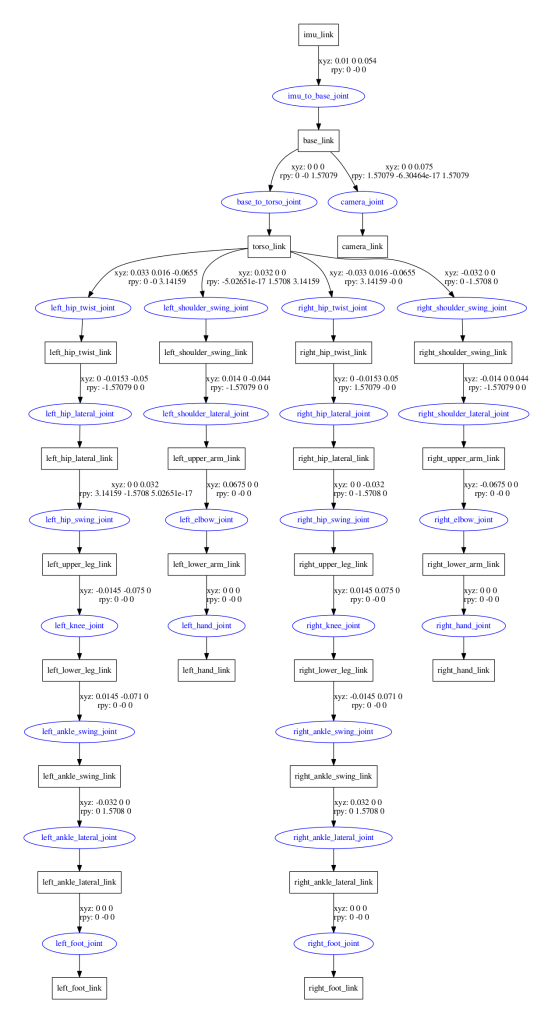

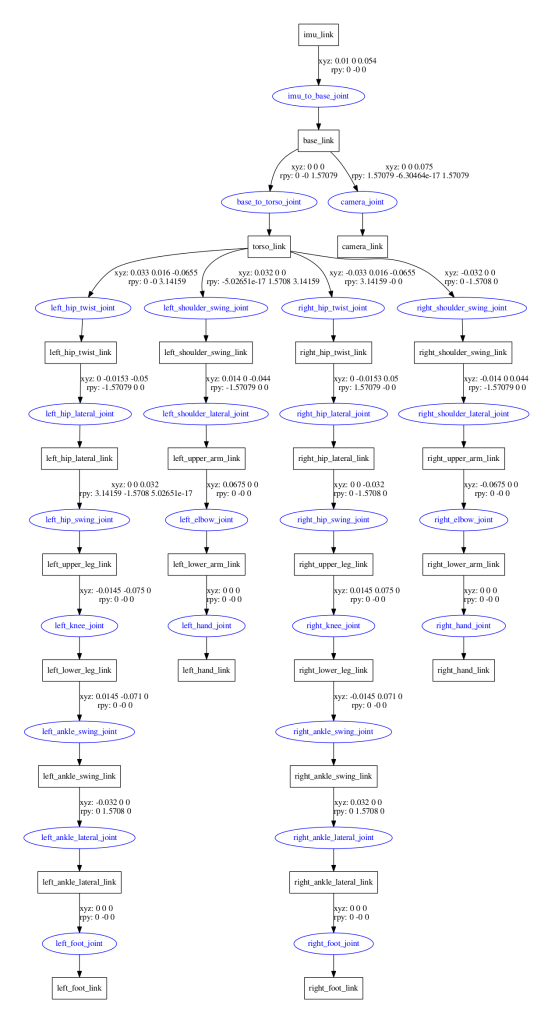

The validity of the URDF file can be checked with teh check_urdf tool. Another great tool for visualising the final result is urdf_to_graphiz, which generates a diagram of the joint and link tree. The tree of my model is shown below. Each joint (blue circles) is positioned with respect to its parent link (black rectangles), and the following/child link is positioned has the same origin as the joint, as shown here. The xyz and rpy labels next to the arrows show the translation and rotation required to get from parent frame to child frame, or in other words, it is the representation of the child’s frame with respect to the parent’s frame. You will also notice the addition of the IMU link, as well as an additional camera link, although this is just a placeholder for a possible camera in the future, and at the moment won’t be used.

Graphviz diagram of URDF file

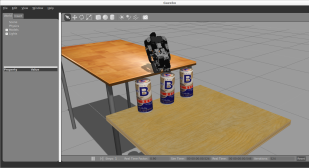

The robot in RViz

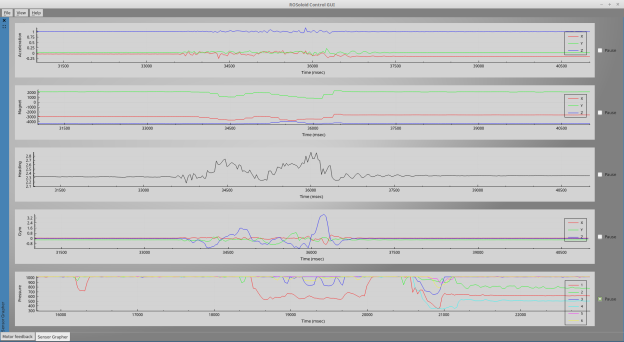

The current state of the robot is shown in the screenshots below. The robot is displayed in RViz with the help of the ROS joint_state_publisher and robot_state_publisher. It is fully articulated and the individual joints can be moved with the help of the GUI which joint_state_publisher provides. The joint states will later be published from the joint values read by the real Bioloid servos. In addition to the kinematic model, I created bounding boxes around the components for the collision boundaries, which are shown in red. This was after the fact I realised that without them, the MoveIt! plugin would use the full CAD geometry in its collision detection routines, which made it almost grind to a halt!

Model front view

Transform frames front view

Collision bounding boxes front view

Model side view

Transform side front view

Collision bounding boxes side view

Integration with MoveIt!

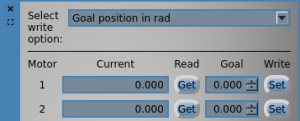

I have only played around with MoveIt! briefly so far, but the results seem very promising. The library has a useful graphical setup assistant, which essentially enhances the URDF with a Semantic Robot Description Format (SRDF) file. The URDF only contains information about how the joints and links are arranged, as well as some other information such as joint limits, and the visual and collision data. The SRDF doesn’t replace the URDF, but exists separately and contains other information, such as further self-collision checking, auxiliary joints, groups of joints, links and kinematic chains, end effectors and poses. So far I haven’t found any need to edit the SRDF directly, as it can be generated and edited by the setup assistant.

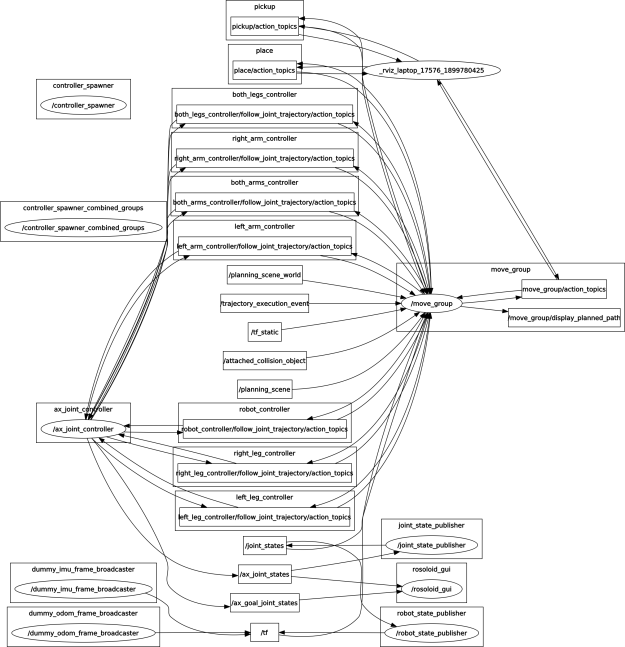

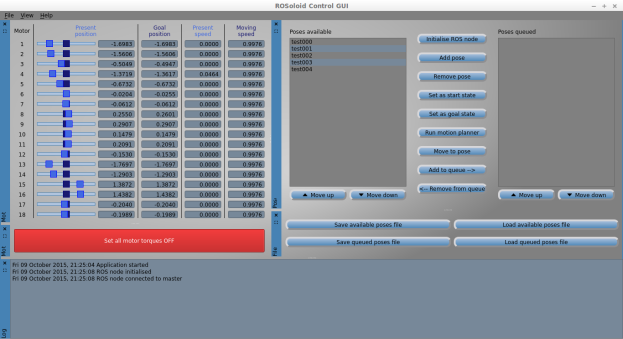

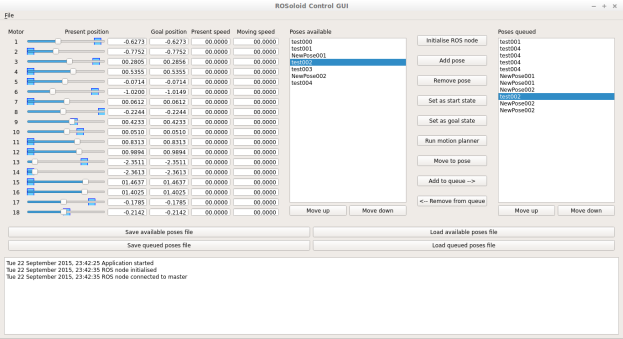

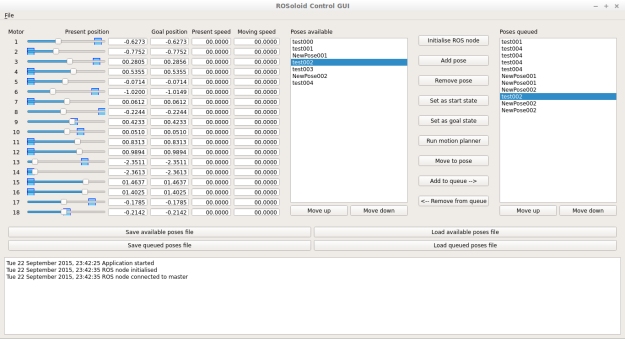

The assistant generates a new ROS package with various templates for path planning and visualisation, which is done via an RViz plugin. So far I have managed to interact with the virtual Bioloid’s arms and legs, in a similar way shown in this PR2 robot tutorial. The aim will be to later on create some poses and walking gaits which I can try out on the real robot, but that is all for now!

Bioloid in MoveIt!

Self-collision checking optimisation in MoveIt! Never in collision

Self-collision checking optimisation in MoveIt! Adjacent links

Planning groups in MoveIt!

Moving an arm in MoveIt!

Moving a leg in MoveIt!

Useful links

URDF:

MoveIt!:

RViz scaling and ambient colour issues: